As this tutorial describes, sprite-based Characters can have their talking animations match what they're saying, a process known as lip-syncing.

This tutorial is for characters animated with the Sprites Unity animation engine.

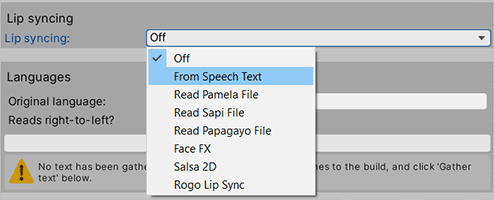

Lip-syncing works by gathering phoneme (or lip) shapes for a speech line, and using those shapes to animate the texture over time. We can choose how these phonemes are gathered with the Speech Manager's Lip syncing option.
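The sketch below only illustrates the general idea and is not Adventure Creator's internal code. It is written in Python and assumes that the phonemes for one speech line have already been reduced to hypothetical (start time, frame index) pairs. At any moment, the frame shown is the one whose key started most recently. Before the first key, a "rest" frame is used.

```python
from bisect import bisect_right

def frame_at(keys, t, rest_frame=0):
    """Return the animation frame to show at time t (in seconds).

    keys: hypothetical list of (start_time_seconds, frame_index) pairs,
    sorted by start time. In Adventure Creator this data would come from
    the chosen lip-syncing mode; here it is hard-coded for illustration.
    """
    times = [start for start, _ in keys]
    # Index of the last key whose start time is <= t
    i = bisect_right(times, t) - 1
    if i < 0:
        return rest_frame  # speech hasn't reached the first key yet
    return keys[i][1]

keys = [(0.0, 1), (0.25, 3), (0.6, 0), (0.9, 2)]
print([frame_at(keys, t) for t in (0.0, 0.3, 0.7, 1.2)])  # [1, 3, 0, 2]
```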

Which mode you choose is up to you. From Speech Text requires no external files and works well if you don't use speech audio, but its results are less accurate.

Read Pamela file tells Adventure Creator to search for files generated by Pamela, in much the same way as it searches for audio files. Pamela is a free Windows application, and is a good choice if you want full control over the phonemes.

Read SAPI file tells Adventure Creator to search for files generated by SAPI, another free Windows application.

For a full guide to the various lip-syncing options, see the Manual's Lip-syncing chapter.

To make use of Pamela and SAPI files, you must also be using speech audio. A separate tutorial covers the process of preparing speech audio, and should be read first. Each lip-sync file must have the same name as its associated audio clip, but with a .txt extension, and must be placed in a Resources/Lipsync folder. For example, if the audio file Player2.mp3 is placed in Resources/Speech, its lip-sync file Player2.txt must be placed in Resources/Lipsync.
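The naming rule above can be expressed as a small path mapping. This is a hypothetical Python helper for checking your own folder layout, not part of Adventure Creator. It assumes each audio clip sits directly inside a Speech folder, which is itself inside a Resources folder, as in the Player2 example.

```python
from pathlib import PurePosixPath

def lipsync_path_for(audio_path: str) -> str:
    """Map .../Resources/Speech/<name>.<ext> to .../Resources/Lipsync/<name>.txt.

    Hypothetical helper: assumes the clip is directly inside Resources/Speech
    (no sub-folders), matching the Player2.mp3 example.
    """
    audio = PurePosixPath(audio_path)
    resources = audio.parent.parent           # the Resources folder
    txt_name = audio.with_suffix(".txt").name  # same name, .txt extension
    return str(resources / "Lipsync" / txt_name)

print(lipsync_path_for("Assets/Resources/Speech/Player2.mp3"))
# Assets/Resources/Lipsync/Player2.txt
```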

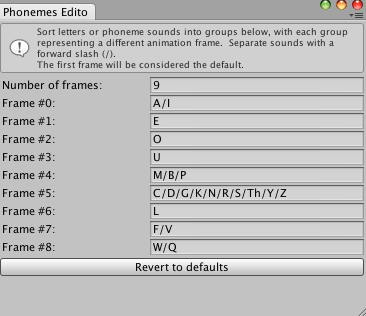

Regardless of which method you choose, you must then choose which phoneme is used by each frame of animation. In the Speech Manager, click Phonemes Editor.

Click Revert to defaults, and the editor will reconfigure itself with recommended defaults based on your chosen lip-syncing method, though you may have to tweak them further. Within each frame, phonemes are separated by forward slashes.
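To show how a slash-separated frame list can be read as a lookup from phoneme to frame, here is a minimal Python sketch. The frame strings are invented examples, not necessarily Adventure Creator's defaults. Two choices here are assumptions for illustration: matching is case-insensitive, and if a phoneme appears in more than one frame, the first frame that lists it wins.

```python
def build_phoneme_lookup(frames):
    """frames[i] is the slash-separated phoneme string for animation frame i.

    Returns a dict mapping each phoneme (upper-cased) to the index of the
    first frame that lists it.
    """
    lookup = {}
    for index, entry in enumerate(frames):
        for phoneme in entry.split("/"):
            phoneme = phoneme.strip().upper()
            # Skip empty entries (e.g. a trailing slash) and keep the first match
            if phoneme and phoneme not in lookup:
                lookup[phoneme] = index
    return lookup

# Invented example entries, one string per animation frame
frames = ["B/M/P", "AY/AH/IH/EY/ER", "O/OO/W", "F/V"]
lookup = build_phoneme_lookup(frames)
print(lookup["M"], lookup["ER"], lookup["V"])  # 0 1 3
```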

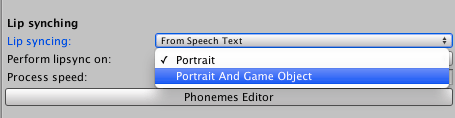

Once you have set up your phonemes, we can animate our Character sprite. In the Speech Manager, set Perform lipsync on to Portrait And Game Object.

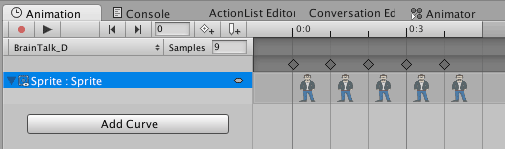

We now need to ensure our Character's talking animation is set up to match the phonemes we've declared. First, open the Animation window by choosing Window → Animation from the top toolbar.

Second, select the Character's sprite GameObject, and choose the animation you wish to check in the Animation window. Here, we're examining the animation BrainTalk_D.

Amend your animation so that each of its frames shows the mouth shape for the phonemes assigned to the corresponding frame in the Phonemes Editor. Your Character can then use Adventure Creator's lip-syncing feature when talking.